AI for Games

In Level 5, one of the modules focused on creating artificial intelligence for games beyond what we had already done, I had created some basic AI in the past but this was merely pathfinding and basic behaviour trees,

whereas this module was centred more on the specific behaviours for the most part, as well as constructing my own more advanced decision making structures.

The framework given was created in the Unity Engine, however all of the behaviours were written in C# scripts, the engine was there as a way to encapsulate the code in a well presented fashion.

About The Project

Starting out

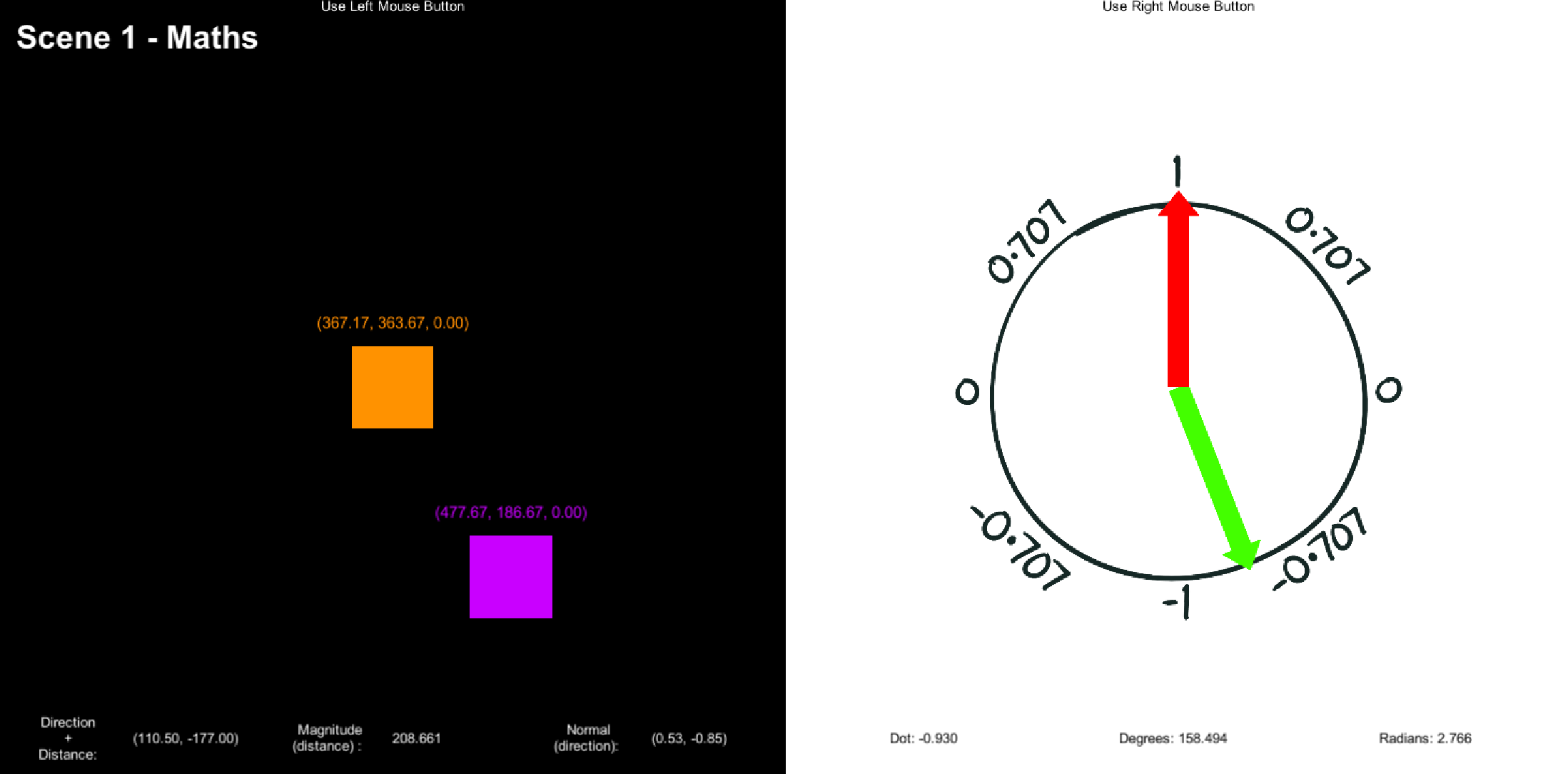

For the first scene, we had a simple visualisation of 2 cubes, measuring relevant information about their distance, the normals etc. and 2 unit vectors, with their cross and dot products. This is important as those calculations form the basis of how many of the behaviours function, the mathematics behind the functions are relatively simple, and I have done them previously, so this was not difficult to implement, but it was important to fully visualise how they were calculated and what they actually represent, in order to properly use them for more advanced operations.

Steering Behaviours

Wander used a number of parameters to randomly move the agent around it's location by taking a random direction to move and adjusting it by a random amount between a given bound to move it in a random, but not overly jittery fashion, much of the debudding of this algorithm came down to tweaking values to give a random enough movement that the AI didn't stray too far too fast, and to ensure it wasn't flittering in opposite directions too unnaturally. After this, the behaviours became more intricate, requiring the development of "feelers" to raycast and determine the position of nearby allies in a given field of view. For group movement, all involved agents then would adjust their position/velocities to account for eachother, moving as a group while maintaining a fair distance from eachother, this was effective for giving an illusion of co-operation, while still allowing enemies to break off from the group if they fall behind or get too close to the player. Whereas collision avoidance allowed the agent to determine which direction is "safest" to move in given where the other agents are currently going. There were some edge cases formed from the gaps between the angles of the feelers, but the more I added, the more computationally expensive it became.

Pathfinding

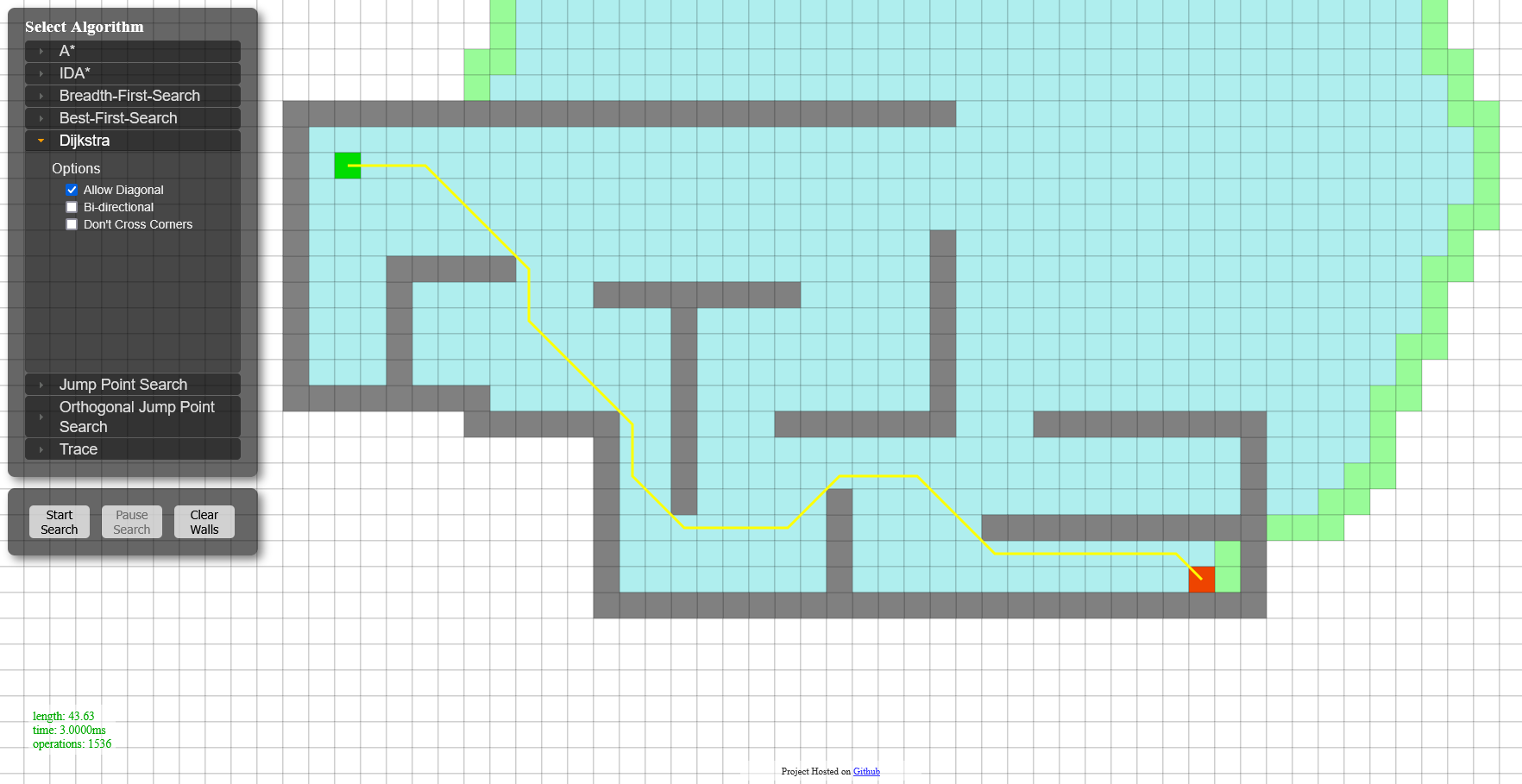

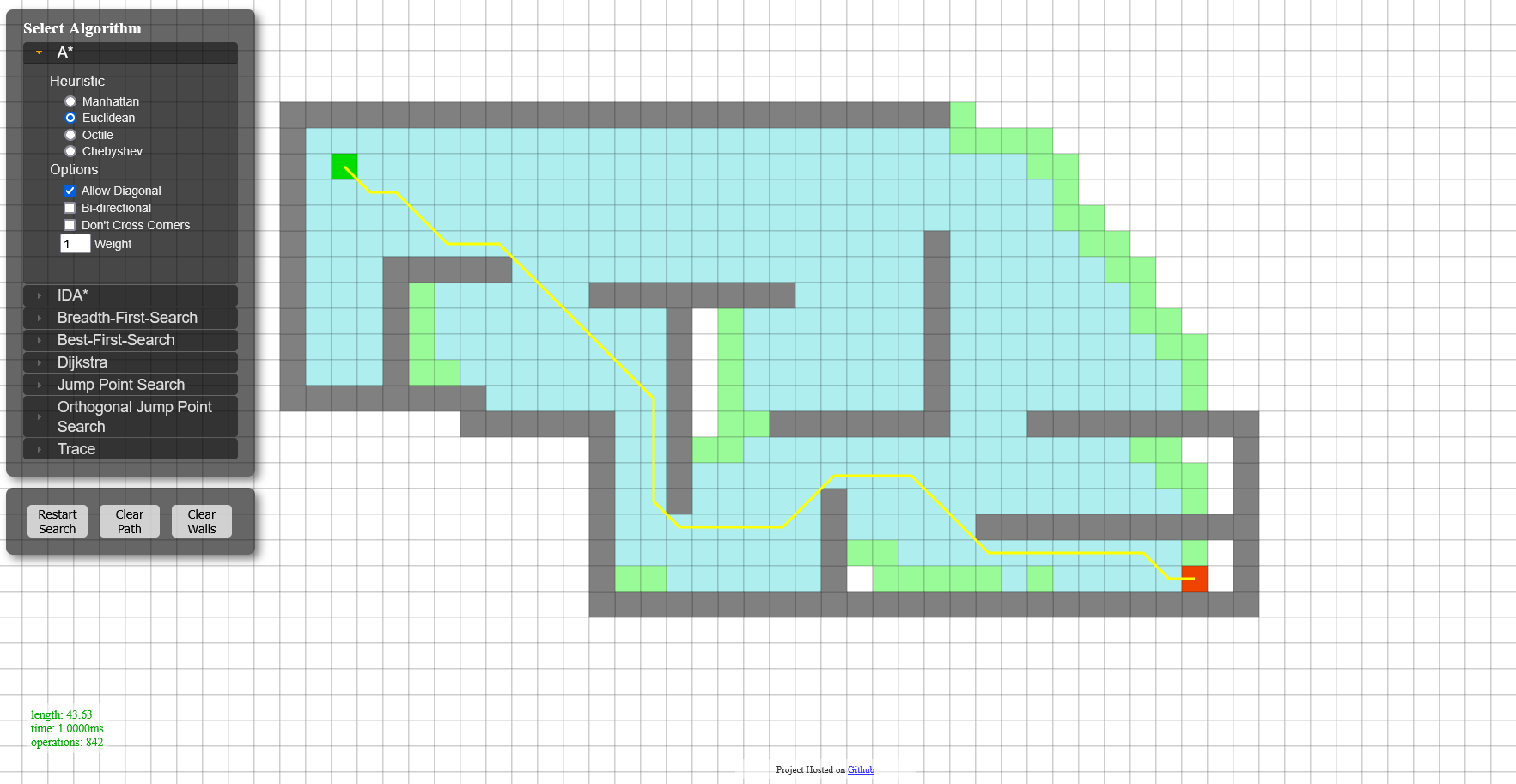

Djikstra's high computrational cost is something largely solved by the next algorithm developed - A-Star (A*). A* works by creating a g-cost value for each node it finds, this being a combination of the current movement distance to get to this node, and an estimate of the distance from the end. This estimate can be figured out in a range of ways that minorly change the way A* will prioritise nodes, the Manhattan distance uses the sum of the distance in each axis, while the Euclidian distance is the direct linear distance between the points. The nodes are explored similarly to Djikstra, but it prioritises the nodes with the lowest g-cost. This always results in a significantly reduced number of nodes that must be assessed. It is much better at handling open spaces and large maps, but still tends to assess a number of unnecessary nodes. There are many optimisations that can be made, such as searching from both the start and endpoints simultaneously, either through alternating or using threads. This was something I implemented in my A* algorithm that led to an increased efficiency since the branches would meet in the middle, completing a path. Another would be precomputing costs around certain parts of the map when the project is compiled, allowing the more complex parts of the map to be compiled faster.

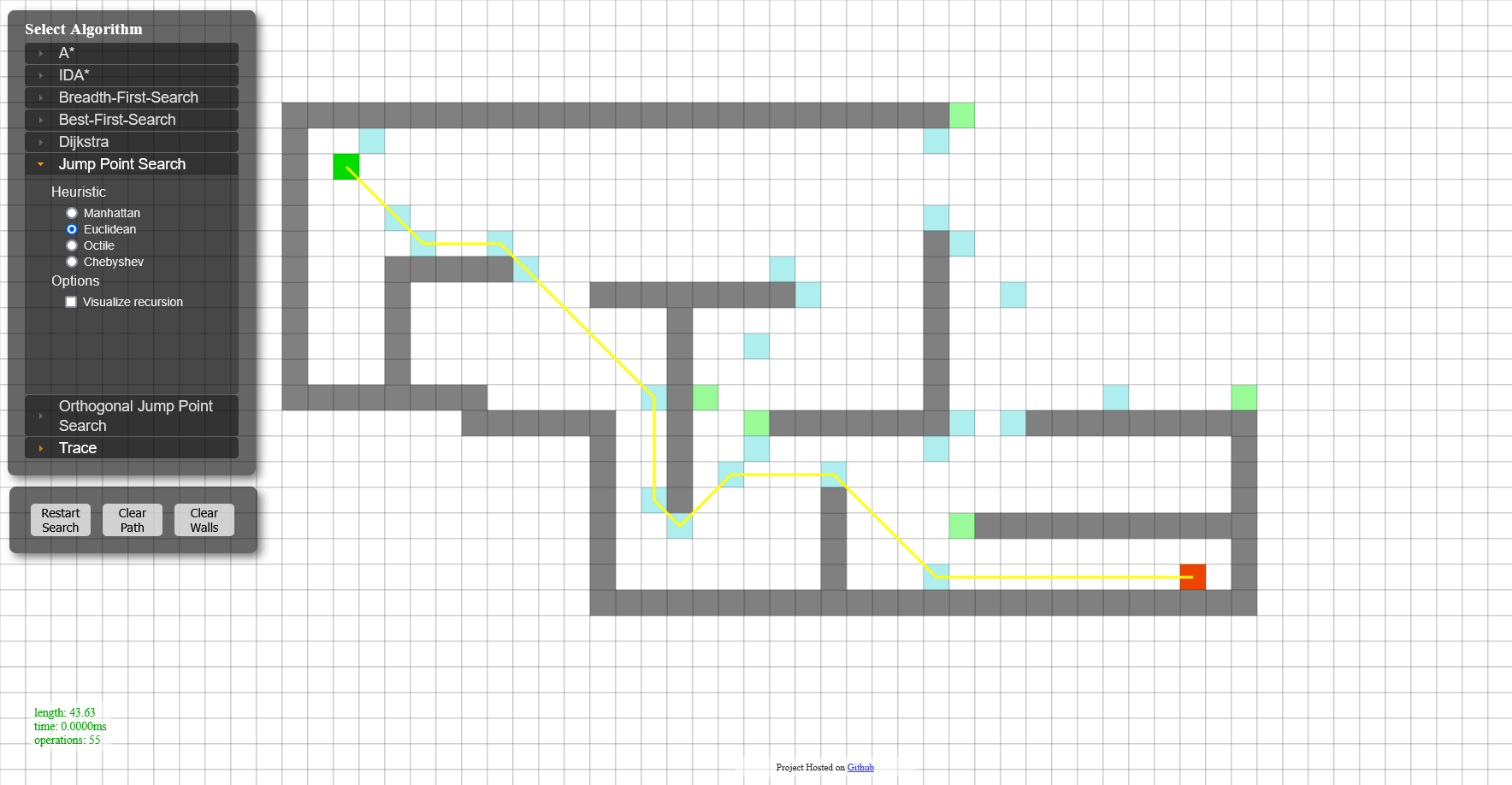

The final algorithm produced was the most optimised, and the one used in the agents for the decision making scene, was Jump Point Search (JPS). JPS is a more advanced algorithm that does not assess each node individually as it explores the map, it instead moves as far as it can in a given direction, if a point of interest is found, it adds it to the list to be assessed, it then moves adjacently to the path directly beside that one and does the same, each point of interest also considers the node it "jumped" from, which is not the one being assessed, but the point in a linear distance from it that was used, for example, if JPS searches any nodes with a greater X and Y value than the currently assessed node, it will move up the Y axis node by node, and check to the right of each of these nodes until it hits a wall, it then checks for interesting points known as forced neighbours and moves up the the next row. interesting points include the end goal, points adjacent to the edge of a barrier, or anything that indicates potential progress in the path. This cuts down on the nodes explored massively, in some cases onyl assessing a handful of different nodes, at it's absolute worst, it is as efficient as A*, and at it's best, can cut down well over 90% of the nodes that need to be explored. Below shows a visual representation of each algorithm's efficiency, generated through a website called pathfinding.js, and shows the sheer difference in speeds.

Decision Making

The final scene used everything created so far to put together a scene with a basic AI agent armed with a pistol that would fight enemy spiders until it died. I built upon this scene with ammo and health pickups, a few different weapons, ammo limits, enemy types etc. I gave the enemies a basic behaiour depending on their type, but for the scene to work properly, the AI agent needed the ability to make many decisions, controlling it's short term goals, movement, behaviour around enemies etc. I used a combination of a behaviour tree and fuzzy logic to allow the AI to flee enemies, attack them and seek towards a pickup of it's choice simultaneously. It ended up being far too strong initially, being basically unkillable. The fuzzy logic gave weightings to certain choices based on different parameters, to combat the performance of the agent, I restricted it's abiliy to respond to enemies to only when it had line of sight, after some value tweaks and balancing, the AI exhibited believable behaviours for dealing with the level. Able to effectively handle waves of enemies, being at low health etc, but not omniscient as to never get caught out from being too confident, or unaware of enemies behind a wall. The image below shows an example of the AI using it's sniper since enemies are far away, and it has enough health that it does not need to worry about ensuring the enemy does not get close.

Overall Thoughts

For the final submission, I was confident that I had achieved the goal of creating some efficient pathfinding algorithms, believable steering behaviours, and an AI agent that makes smart, "human" decisions. I learnt a lot about decision making structures, and JPS was completely new to me, so I am glad I was able to implement it effectively. The complexity of my AI agent was something I was proud of as it relied on a number of advanced decision making techniques working together in an efficient and effective manner. There's some room for further developments if I come back to the project in the future, including adding more game mechanics, or creating a level that pits two teams of these agents against eachother, each with slightly randomised behaviours, I would then give them a variety of objectives and modes to ensure they had fitting behaviours for a variety of situations. I may then explore the concept of machine learning for one of the teams to further push their abilities.

Overall Grade: 1st